I’m sharing another in progress pattern from the Agile Codelines Pattern Lanuage. In it I’m trying to capture the tradeoffs involved in getting the right kind of feedback in the right time frame. It sets the stage for some of the more “controversial” patterns like Pull Request and Automated Checks.

This is a Updated Version of this Post

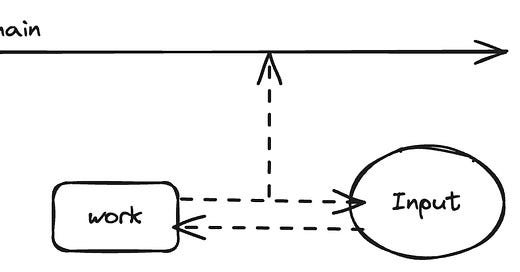

When developing on Task Branch and quickly moving work to the Main Line, changes affect the entire team, so you want to avoid breaking things. Tests help to the extent that they test the right things, so feedback from others on approach, style, design, and testing approach is valuable. This pattern describes a way to get prompt and useful feedback from team members before you integrate changes into the Main Line.

Balancing Feedback, Learning, Speed, and Quality

Good feedback is prompt, relevant, and actionable. Review processes often have gates that, on the surface, seem like useful safety checks but can often be more disruptive than valuable. Human feedback can identify issues and solutions that automated feedback cannot. Not all changes require the same level of review.

You want to integrate changes rapidly, maintain a working codeline, and minimize technical debt, and eliminate accidental technical debt. Tests are an important part of this, and test coverage metrics can give you some confidence, but you need a way to ensure the tests cover the right things. Code review mechanisms can identify missed requirements and ineffective tests. And tests don’t identify every issue.

Software development is a human, collaborative process, and developers can learn much from each other. However, human interaction can be slow, both because of different demands on time and because human interactions have overhead in terms of time and context switching.

A fail-fast approach — where you integrate code with minimal human feedback and fix problems as they arise —-can work. But every error disrupts the team, and not all errors are detected promptly, causing business risk. The later in the pipeline errors are found, the more costly they are to fix.

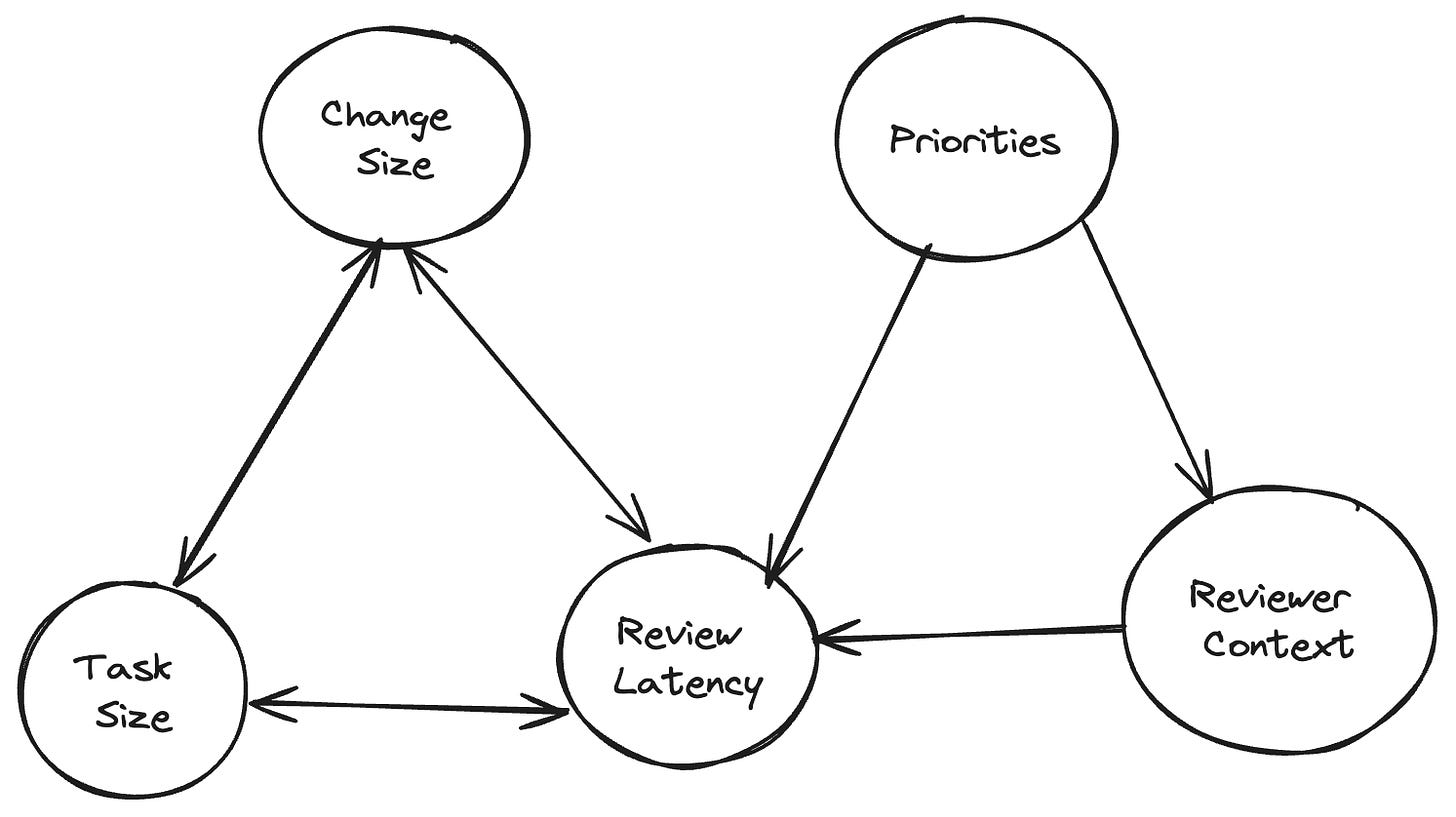

Feedback from other developers can help, but getting feedback can be slow if the person giving the feedback can’t review promptly or lacks the full context for the change. Pair Programming can address many problems around context and responsiveness, but it may not work for every team, and a broader perspective on a problem can identify incorrect assumptions that a pair co-creating code might have missed.

When the time to get feedback in the form of a code review is long, the delay can lead to a context switching cost or potentially a merge conflict, slowing down the team. Not getting feedback can lead to tunnel vision about the solution. There are ways to mitigate the cost of waiting for feedback, but they all have context-switching costs. You might ask a particular team member for feedback since they may have expertise or context, but that person becomes a critical path constraint. Opening up reviews to the whole team could make it more likely that someone can review promptly, but that comes with risks:

Spreading responsibility too broadly can reduce urgency because of a sense that “someone else will do it.”

Not everyone will have all the context to give good feedback.

While any skilled developer with a basic understanding of the domain and technology stack can provide feedback on code, the most valuable, effective feedback often comes from those familiar with the code base, the project, and the goals of a given unit of work. While part of the value of code reviews is shared understanding and knowledge transfer, there is a time and place for each kind, and a pre-commit code review is not the best time to revisit alternatives (unless new information has emerged).

When developers lack context or domain knowledge, or the change set is too large, reviews may be cursory and of little value.

You want code review feedback to be more than just a box to check, but something that is valuable and helps to foster a collaborative dynamic.

Relevant, Timely, and Actionable Feedback

Before merging code from a Task Branch into the Main Line get feedback from another developer. Identify practices that ensure that the feedback is timely and that the reviewer has the appropriate context. Acknowledge the cost-benefit for some trivial changes where cursory reviews might be sufficient.

Good Code Reviews are a form of collaboration that can:

Reduce error

Helps the code improve.

Lead to a shared understanding of the code base.

Feedback that achieves these goals is:

Relevant: Not just general comments about coding style but comments that take design conversations and the goal of the work into account.

Timely: Feedback is shared shortly after the code is ready for review, and questions are answered promptly.

Actionable: Feedback gives enough information to allow the developer to act on it.

Relevant feedback addresses the code’s context, including requirements, design discussions, etc. General programming feedback about style and related issues is useful, but Automated Checks are often better for that sort of feedback. The more context the reviewer has about the problem, the solution, and the design, the easier it is to be relevant. While a Team Focus might mean that anyone on the team can provide this kind of feedback, another developer who was engaged in the design and coding process is often best suited to review a change. Pair programming or having a Feature Partner can make this easier.

Timely feedback is sufficiently prompt so that the developer does not lose context because of time spent waiting. Timeliness does not mean “immediate,” though sooner is often better. The definition of “timeliness” should be part of a team’s working agreements.

Actionable feedback is as specific as possible and suggests specific actions to take or questions to discuss. Vague comments about concerns or errors slow down the process.

Code Review feedback can be delivered either interactively or asynchronously. Interactive feedback, where code is discussed in real-time, can take any number of forms:

Pair Programming

Desk Review with a Feature Partner.

An interactive review after sharing a Pull Request

Synchronous feedback doesn’t preclude some preliminary review and comment; much like preparing for a meeting, taking a few minutes to review code “offline” can make for a better review, as long as this internal is short.

Asynchronous Feedback is often associated with a Pull Request Process, though it can occur in other ways too. Asynchronous feedback can make sense for:

Larger changes

Trivial Changes, where feedback with comments is likely to be sufficient

Distributed teams, where there is a working agreement setting out expectations and commitments about timeliness.

Even when feedback is asynchronous, getting it promptly is still important, and teams should consider having interactive sessions to discuss unclear items.

Remember:

The goal is to improve the work. Yes, you want to identify problems, but you also want to suggest solutions.

The participants are all creators; if you give feedback now, you may get feedback later.

The author owns the work and decides how to process the input, subject to fulfilling the requirements, the team norms, and general design and implementation guidelines. A code review is not the time to impose personal preferences on the code, though it could be a time to identify gaps in guidelines.

If your process requires “approval” before merging and feedback is asynchronous, err on the side of permissive approval (“approved pending these changes”, for example). Permissive approval avoids delays and demonstrates trust in team members.

Review Effort (and Skipping Review)

When taking the time to review code, consider the risk of a change. The main value of software comes when it is integrated. Peer feedback is valuable, but it also comes at a cost. Some tools can help you gauge the risk of changes, and some changes might be OK to merge when all automated checks pass — without a peer review,

Metrics

Some metrics to track to help you identify bottlenecks:

Time to first comment

Time between interactions

Time from Code Completion to merge.

Using a Pull Request mechanism makes it easier to collect this data, though it is still relevant for any feedback process.

Cautions

If the team loses track of the purpose of Code Review—collaboration—it can easily become a bureaucratic, low-value process.

In particular, avoid the following traps:

Having only senior people do reviews. You want the reviewers to know the code and the problem, and initially, that might be the more experienced people, but the choice is about skill and knowledge, not status.

Treating code reviews as “evaluations.” The goal is to improve the code. If a pattern emerges that leads to the thought that someone needs coaching, address that in another context, ideally with an “elevate the person’s skills” frame.

Having reviews be gates. If you have a team dynamic where someone will blithely ignore show-stopper issues, this points to larger team dynamics issues. If you must have gates, make them part of your automated tests.

While speed is important, do not skip steps in favor of speed. If the feedback cycle is taking too long, work to improve the process.

When gathering metrics, use them for understanding rather than evaluation. If the metrics don’t look good, the reasons for that might be out of the control of individuals, yet easy to fix.

Next Steps

Facilitate relevant (and timely) feedback by ensuring a Team Focus so that Team members can feel comfortable prioritizing reviews and finishing work in progress over any individual goals. Avoid emphasizing individual tasks over larger goals.

Use Automated Checks to catch mechanical issues with the code so that team members can focus on design and purpose.

Make sure there is a reviewer with good context, such as a Feature Partner who understands the product scope and technical design.

Enable automation and support remote team members using a Pull Request.

Smaller tasks are easier to give good, prompt feedback on. Enable timely feedback with Small Development Tasks

Moving to Better Code Reviews

It’s easy to get caught up in discussions about tools and the code review process itself when trying to improve it, but most of the challenges with code reviews come down to:

Lack of clarity about why you are doing them.

Lack of working agreements/culture that supports those rules.

A good code review is about sharing knowledge and Identifying gaps that tools can’t find. It won’t be perfect, and taking longer in the name of perfection often yields worse outcomes than doing a quicker (or no) check and fixing any problems, as long as you later strive to identify what the issues were.